Predator UAV on Steroids

The Drudge Report points this morning to an article on the MQ-9 "Reaper."

The airplane is the size of a jet fighter, powered by a turboprop engine, able to fly at 300 mph and reach 50,000 feet. It's outfitted with infrared, laser and radar targeting, and with a ton and a half of guided bombs and missiles. The Reaper is loaded, but there's no one on board. Its pilot, as it bombs targets in Iraq, will sit at a video console 7,000 miles away in Nevada.

...

The Reaper's first combat deployment is expected in Afghanistan, and senior Air Force officers estimate it will land in Iraq sometime between this fall and next spring. They look forward to it.

"With more Reapers, I could send manned airplanes home," North said.

The Reaper can fly twice as fast and twice as high as the Predator. Instead of two missiles, it can carry 14 air-to-ground weapons or two 500-pound bombs.

The infantry love these things. Predators provide real-time video of the operational area. Reapers will do that plus provide significant "kinetic" air support. Imagine the destructive potential of a single sniper with a laser, backed by a Reaper.

Phil recently pointed to an article on the development of autonomous TASER-equipped robots for security duty. Phil commented that this was "how the Cylons got their start." I agree that using autonomous robots for violence is a bad idea. If accepted, it would set a dangerous precedent. The court in the famous man-trap case Katko v. Briney stated that "the law has always placed a higher value upon human safety than upon mere rights of property." That case involved the use of a lethal spring-gun, but the issues are similar.

I'm much less troubled by UAVs. These units are not autonomous - they are controlled by humans. Since the pilot's actions take place in a secure facility, the opportunity exists for greater oversight. Everything that the pilot does is recorded, and his commanding officer could be standing right next to him. Under those circumstances, there's not much chance for a pilot to go rogue.

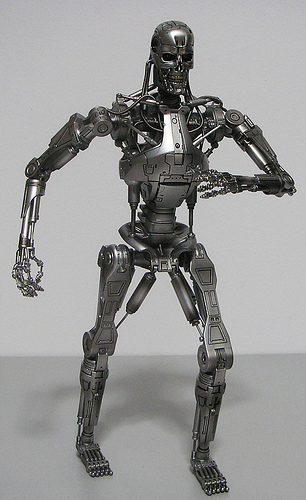

It doesn't take a lot of imagination to see where this technology is going. UAVs could end up looking like F-22s, and our infantry could look like this:

Or even this:

For argument's sake let's say that we avoid the risk of Terminator/Cylon overthrow because these units are always controlled by humans - is this a good or bad development?

Comments

Stephen

Personally (Cylon comments notwithstanding), I think having them always guided by humans subjects them to unnecessary limitations. What do you do when a fully automated system is better at targeting the enemy, avoiding civilian casualties, and preventing friendly fire than a system with a human being behind the wheel? The assumption that human beings will always have better judgment than machines might have a shorter lifespan than you imagine -- especially in a world where cops blow people away for reaching for their keys or holding a soda can. Plus, what do you do when the enemy starts using fully automated systems?

Posted by: Phil Bowermaster | July 16, 2007 08:03 AM

One other thought -- when deployed for war, I think unleashing a fully automated armed robot is more like lobbing a grenade (an extremely dangerous and effective grenade) than it is like rigging one's personal property with a booby trap.

Posted by: Phil Bowermaster | July 16, 2007 08:05 AM

This post, I think, gets things a bit backwards. UAVs are going to be autonomous much sooner than UGVs (g=ground). Obstacle avoidance, path planning, localization, and perception are all easier in the air.

In addition, as far as target detection, identification, and engagement, it will be easier to do it autonomously from a UAV.

Looking at these problems outside the context of the particular engagement is a mistake.

A war between the US and China is totally different than the current Global War on Terror.

This distinction will last for some time. You will have wars between well established parties. A war like this hasn't happened in some time.

You will also have wars between independent actors and nation-states.

In the former, the situation is closer to a total war. In the latter, precision to spare the vast majority of people is the most important aspect.

This is all clearly a very good development, for the same basic reason I mentioned on the other thread. The alternative to robots is humans. Whether the robots have policy-level instructions or low-level instructions, humans are really in control.

"Imagine the destructive potential of a single sniper with a laser, backed by a Reaper."

How about a remotely controlled UGV on the ground instead of a sniper? The systems work well together.

Posted by: ivankirigin | July 16, 2007 12:28 PM

Phil,

The assumption that human beings will always have better judgment than machines might have a shorter lifespan than you imagine -- especially in a world where cops blow people away for reaching for their keys or holding a soda can.

That is based on two assumptions: first, that humans don't get accelerated, and second, that they will be operating under reaction times that preclude them from making good judgments. My take is that in most wars, the high-tech participants will have time enough to determine whether something is friend or foe. They will probably often have incomplete information, but a robotic system can't come to a better decision in that case either.

In a war where one or both sides use fully automated systems, there's good reason to clearly identify these. If one side attempts to hide its bots inside noncombatant areas, then there won't be much that one can do to avoid innocent loss of life (except to avoid attacking). My take is that due to the sluggishness of the real world, humans (especially tech-enhanced ones) will still be able to contribute on a military battlefield. And higher intelligence can still be fooled.

Posted by: Karl Hallowell | July 16, 2007 12:29 PM

Fourth-generation warfare makes these tools obsolete. This shows the problem when thinking about the future using today's metaphors.


Posted by: Steve Gall | July 16, 2007 05:57 PM

Fourth-generation warfare makes these tools obsolete. This shows the problem when thinking about the future using today's metaphors.

Well, when I read about "4th generation" warfare, they seem to be talking about standard asymmetric warfare, which has been going on as long as there's been warfare between parties of unequal strength. IMHO many of these weapon systems will continue to be useful, and today's metaphors will be well used.

Posted by: Karl Hallowell | July 16, 2007 10:28 PM

Karl:

Indeed.

Posted by: Stephen Gordon | July 17, 2007 08:15 AM

I know Drudge and USA Today and more or less equivalent news sources know everything, but my experience has taught me that stuff like this is always way more advanced in implementation than is publicly discussed.

In other words, the future is now. And I suspect that these beauties are way more automated, and automatable, than is discussed. BTW, if you didn't see it, I love the photo of the female Captain (pilot/operator) in front of a PC supposedly running the war. Uh-huh.

Posted by: MDarling | July 19, 2007 10:28 AM